Automated testing

- We are great at testing our own theories, but we often solicit external input far too late, whether from other stakeholders or from data sources we don’t interact with day-to-day (e.g., getting a manufacturer quote)

- As the design process goes on, the time available for thorough testing shrinks, so later changes often go unvalidated and are disproportionately likely to cause issues

- We tend to overvalue the immediate results of tests and undervalue standardizing those tests so they can be repeated in the future (i.e., short-term gain vs. long-term investment)

- We tend not to think of the results of tests as part of the documentation & communication process

As I progressed in my hardware engineering career, I became more interested in how software engineering teams qualify iterative changes across a massive design space (often tens of thousands to millions of lines of interdependent code). One of the first things I found was a firm reliance on automated testing in any large code-base. Usually run through continuous integration and continuous deployment (CI/CD), sometimes described as “continuous test and deploy,” automated testing is the backbone of any large code-base. It is so central that an entire development philosophy, test-driven development (TDD), is built around it: you first write a test that captures an issue or feature, THEN develop the solution that meets it. I’ll admit there’s a bit of an endorphin hit when getting a ✅ after significant development work (we all need external validation, even if it’s from a robot).

Reflecting on this culture of distributed, automated testing, I’ve found that it directly addresses each of the biases I listed above. Whether or not a team subscribes to full test-driven development, these tests are often written early in the design process and re-run automatically every time the design changes (this is the “continuous” in “continuous test”). This may seem obvious, but it does a few critical things:

- It front-loads test development, so we can easily test late-stage designs against criteria we specified early in the process, when there was more time

- In the same vein, it pulls some late-stage questions earlier into the design process, such as what lead time we can accept for parts or which components need a specified manufacturer

- It promotes communication early in the design process, since the test criteria themselves have to be discussed and agreed on up front
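To make the front-loading idea concrete, here is a minimal Python sketch, assuming each part’s quoted lead time is already available as a number of weeks. The `Part` class, the `MAX_LEAD_TIME_WEEKS` limit, and the part numbers are hypothetical; the point is that the acceptance criterion is written down once, early, and every later design change is checked against it automatically.

```python
from dataclasses import dataclass
from typing import List

# Acceptance criterion agreed on early in the project (hypothetical value).
MAX_LEAD_TIME_WEEKS = 12


@dataclass
class Part:
    designator: str
    part_number: str
    lead_time_weeks: int  # e.g., taken from a supplier quote


def lead_time_violations(parts: List[Part], max_weeks: int = MAX_LEAD_TIME_WEEKS) -> List[Part]:
    """Return the parts whose quoted lead time exceeds the agreed limit."""
    return [p for p in parts if p.lead_time_weeks > max_weeks]


if __name__ == "__main__":
    # Hypothetical BOM excerpt for illustration only.
    bom = [
        Part("U1", "MCU-0001", 8),
        Part("U2", "PMIC-0002", 20),
        Part("C1", "CAP-0003", 2),
    ]
    for p in lead_time_violations(bom):
        print(f"{p.designator} ({p.part_number}): {p.lead_time_weeks} weeks exceeds {MAX_LEAD_TIME_WEEKS}")
```

Because the limit is a parameter, a late-stage design can be re-checked against the original criterion without anyone having to remember what was agreed.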

My favorite outcome of these automated tests is that they are self-documenting. When you build an automation, commit it to the project, and include the test and its output in the design review, you preemptively answer many of the questions reviewers would otherwise ask. I find it significantly easier to review a hardware design that includes tests.

In a recent discussion with an engineering team, one of the lead designers mused that any time they catch an issue in a design, they can add a check for it to their test suite for the benefit of junior engineers. That’s exactly the mentality hardware teams should adopt: you are building tests not only for yourself, but for everyone around you. By adding these automations to your project templates, you include them in every new project by default, so the investment pays off not just in the current design but across many designs, snowballing into a shield against the pesky, repeatable errors that tend to sneak in.
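One way to capture that mentality in code is a small check registry: each time a review catches a new class of issue, someone adds one decorated function, and every project that uses the shared module picks it up. This is a hypothetical Python sketch, assuming components are plain dictionaries of attributes; `check`, `CHECKS`, and `run_checks` are illustrative names, not part of any AllSpice API.

```python
from typing import Callable, Dict, List

# A component is represented as a plain dict of attributes (assumption).
Component = Dict[str, str]
Check = Callable[[Component], List[str]]

# Shared registry; a project template would import this module.
CHECKS: List[Check] = []


def check(fn: Check) -> Check:
    """Register a check so every project using this module runs it."""
    CHECKS.append(fn)
    return fn


@check
def has_manufacturer(c: Component) -> List[str]:
    return [] if c.get("manufacturer") else ["missing manufacturer"]


# Hypothetical example: added after a review caught a part with no datasheet link.
@check
def has_datasheet(c: Component) -> List[str]:
    return [] if c.get("datasheet") else ["missing datasheet link"]


def run_checks(components: List[Component]) -> Dict[str, List[str]]:
    """Run every registered check and collect problems per designator."""
    results: Dict[str, List[str]] = {}
    for c in components:
        problems = [msg for fn in CHECKS for msg in fn(c)]
        if problems:
            results[c.get("designator", "?")] = problems
    return results
```

Adding a lesson learned is then a one-function change that reviewers can see in the diff.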

What tests can be added?

Many different tests can be added, depending on your needs as a company or team. We detail some of them on our Actions page.

Here, I will demonstrate a relatively straightforward but very powerful category of testing: component validation.

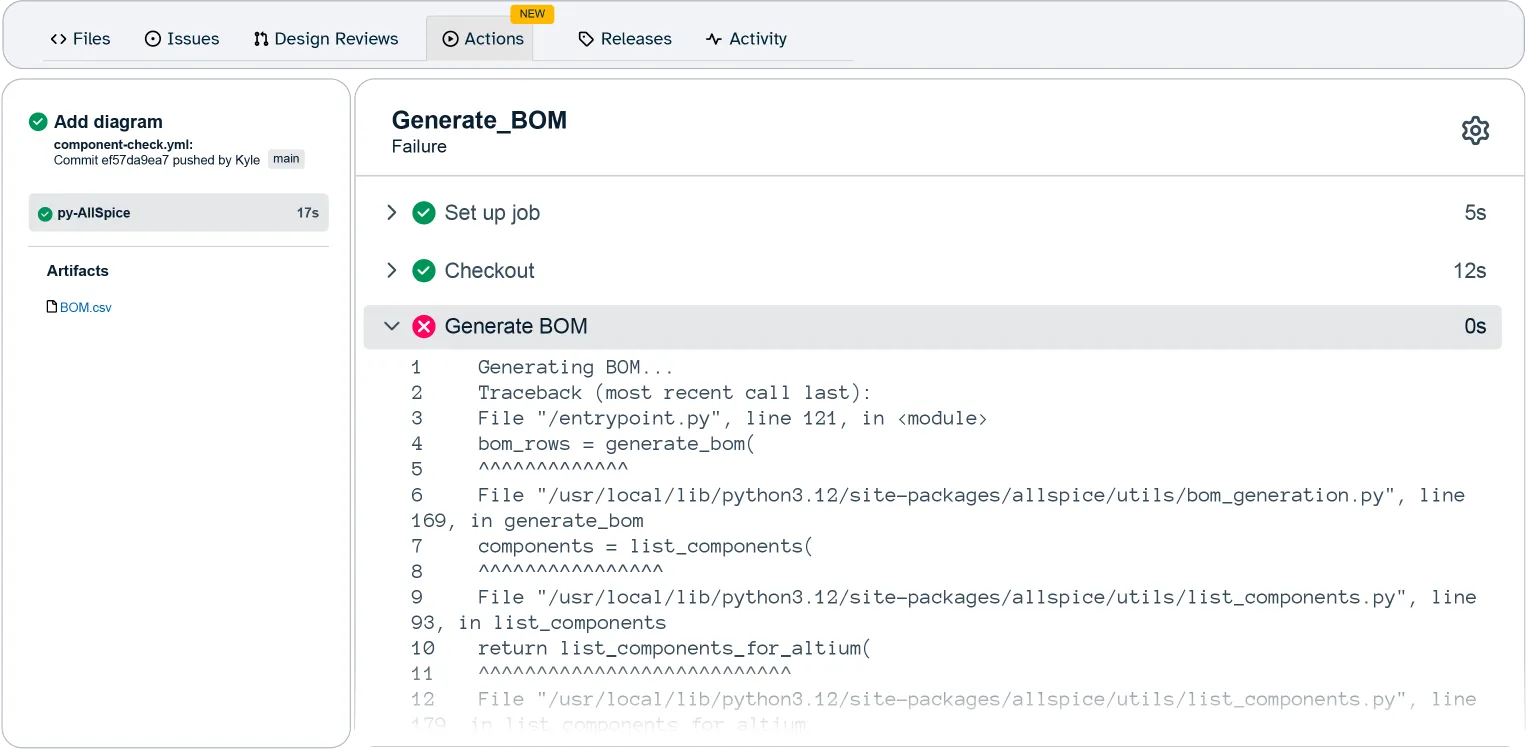

In this example, I’ve written a test series for my project that:

- Triggers on every design review and every change to the design during that review

- Generates an updated bill of materials (BOM)

- Checks each of the components in the hardware design to ensure attributes are properly formatted

- Generates a report in the design review
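The steps above can be sketched roughly as follows in Python. This assumes the BOM has already been exported to CSV (how that export happens depends on your ECAD tool and is not shown), and the column names, the `REQUIRED` tuple, and the `MPN_PATTERN` format rule are hypothetical placeholders for whatever your team standardizes on. The output is a Markdown table that a CI job could post to the design review.

```python
import csv
import io
import re

# Hypothetical column names and formatting rule; adapt to your own BOM export.
REQUIRED = ("Designator", "Manufacturer", "MPN")
MPN_PATTERN = re.compile(r"^[A-Z0-9][A-Z0-9\-./]*$")


def load_bom(text):
    """Parse a CSV BOM export into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def check_row(row):
    """Return a list of formatting problems for one BOM row."""
    problems = [f"missing {col}" for col in REQUIRED if not (row.get(col) or "").strip()]
    mpn = (row.get("MPN") or "").strip()
    if mpn and not MPN_PATTERN.match(mpn):
        problems.append(f"malformed MPN '{mpn}'")
    return problems


def render_report(rows):
    """Build a Markdown report suitable for posting in a design review."""
    lines = ["| Designator | Issues |", "| --- | --- |"]
    flagged = 0
    for row in rows:
        problems = check_row(row)
        if problems:
            flagged += 1
            lines.append(f"| {row.get('Designator', '?')} | {'; '.join(problems)} |")
    lines.append("")
    lines.append(f"{flagged} of {len(rows)} components flagged.")
    return "\n".join(lines)


if __name__ == "__main__":
    bom_csv = """Designator,Manufacturer,MPN
R1,Yageo,RC0402FR-0710KL
C1,,GRM155R71C104KA88D
U1,Texas Instruments,tps62840 dlb
"""
    print(render_report(load_bom(bom_csv)))
```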

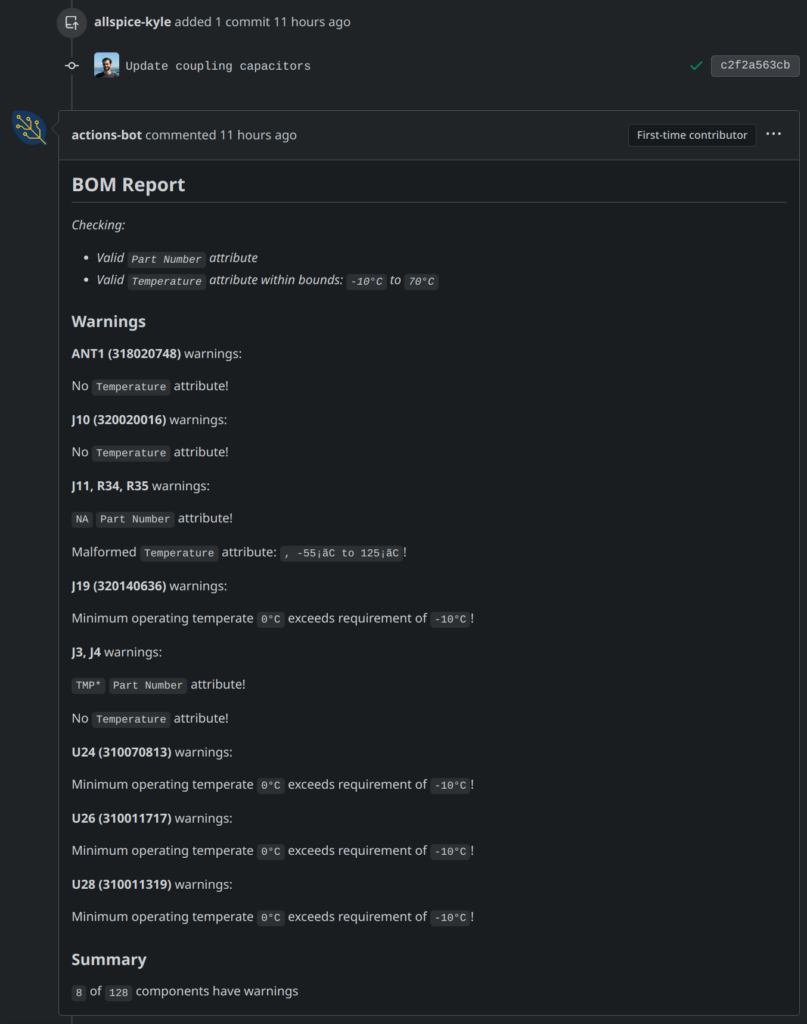

You can see the full example in our component check demo.

As you can see, I’ve added checks for temporary or missing part numbers and a regression test against the temperature specification, as well as a summary at the bottom. In my case, I’ve made these checks optional: they issue a warning in the report rather than failing the test outright.
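Checks like these can be sketched in Python as shown below. The placeholder prefixes in `TEMP_PN` and the -40 to 85 °C requirement are hypothetical values chosen for illustration; substitute your own part-numbering conventions and environmental spec. Every problem is recorded as a warning rather than an error, matching the non-blocking choice described in the paragraph above.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Project temperature requirement (hypothetical industrial range).
REQUIRED_MIN_C = -40
REQUIRED_MAX_C = 85

# Placeholder part numbers that should not survive to release (hypothetical patterns).
TEMP_PN = re.compile(r"^(TBD|TEMP|XXX)", re.IGNORECASE)


@dataclass
class Component:
    designator: str
    part_number: Optional[str]
    temp_min_c: Optional[float] = None
    temp_max_c: Optional[float] = None


@dataclass
class Report:
    warnings: List[str] = field(default_factory=list)

    def summary(self, total: int) -> str:
        return f"{len(self.warnings)} warning(s) across {total} component(s)"


def check_components(components: List[Component]) -> Report:
    """Flag missing/temporary part numbers and parts rated below the spec."""
    report = Report()
    for c in components:
        pn = (c.part_number or "").strip()
        if not pn:
            report.warnings.append(f"{c.designator}: missing part number")
        elif TEMP_PN.match(pn):
            report.warnings.append(f"{c.designator}: temporary part number '{pn}'")
        if c.temp_min_c is None or c.temp_max_c is None:
            report.warnings.append(f"{c.designator}: no temperature rating")
        elif c.temp_min_c > REQUIRED_MIN_C or c.temp_max_c < REQUIRED_MAX_C:
            report.warnings.append(
                f"{c.designator}: rated {c.temp_min_c}..{c.temp_max_c} °C, "
                f"needs {REQUIRED_MIN_C}..{REQUIRED_MAX_C} °C"
            )
    return report
```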

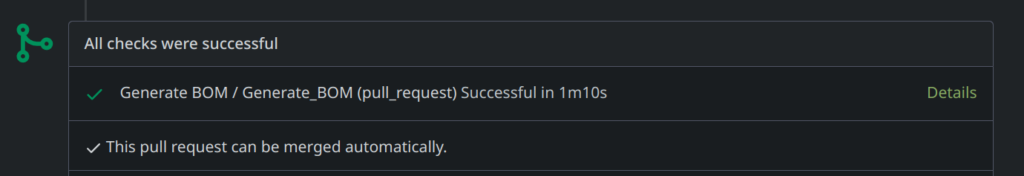

Whether a check warns or fails is entirely configurable. A stricter option would be to fail if any component doesn’t meet my requirements, or to set a threshold that allows only a certain number of warnings. Merging the design would then be blocked until those errors are resolved.
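A minimal way to express these three policies (warn only, strict, and threshold) is sketched below in Python. It assumes, as most CI systems do, that a job exiting with a non-zero status is reported as failed; whether a failed check actually blocks a merge depends on how the repository’s merge protections are configured. `Policy`, `should_fail`, and `exit_code` are hypothetical names.

```python
from enum import Enum


class Policy(Enum):
    WARN_ONLY = "warn"       # report problems, never fail the job
    STRICT = "strict"        # any problem fails the job
    THRESHOLD = "threshold"  # fail only when problems exceed a limit


def should_fail(problem_count: int, policy: Policy, max_warnings: int = 0) -> bool:
    """Decide whether the component check should fail under a given policy."""
    if policy is Policy.WARN_ONLY:
        return False
    if policy is Policy.STRICT:
        return problem_count > 0
    return problem_count > max_warnings


def exit_code(problem_count: int, policy: Policy, max_warnings: int = 0) -> int:
    """Map the decision to a process exit status (non-zero means failure)."""
    return 1 if should_fail(problem_count, policy, max_warnings) else 0
```

A CI step would typically end with something like `sys.exit(exit_code(len(warnings), Policy.THRESHOLD, max_warnings=5))`, so tightening the policy later is a one-line change.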

My suggestion is to implement these incrementally. First, check for missing attributes that could prevent your operations team from finding an available part. Then add more tests over time, such as temperature, RoHS compliance, manufacturer part number, etc. You can even connect to additional data sources, like your PLM or an external data provider such as DigiKey, to check part availability or verify attributes against public data.
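Cross-checking against an external source can be sketched the same way. In this hypothetical Python sketch the lookup is passed in as a plain function, so a PLM export, a distributor API client, or a test stub can be swapped in; the `FAKE_SOURCE` data is made up, and no real DigiKey or PLM API calls are shown. Field names such as `mpn`, `rohs`, and `stock` are assumptions.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, str]
# Hypothetical interface: given an MPN, return reference attributes or None.
Lookup = Callable[[str], Optional[Record]]


def verify_against_source(bom: List[Record], lookup: Lookup,
                          fields=("manufacturer", "rohs")) -> List[str]:
    """Compare BOM attributes with an external reference and flag mismatches."""
    problems: List[str] = []
    for row in bom:
        ref_des = row.get("designator", "?")
        mpn = row.get("mpn", "")
        if not mpn:
            problems.append(f"{ref_des}: missing MPN")
            continue
        ref = lookup(mpn)
        if ref is None:
            problems.append(f"{ref_des}: {mpn} not found in reference data")
            continue
        # Flag attributes that disagree with the external source.
        for name in fields:
            if name in ref and row.get(name) != ref[name]:
                problems.append(f"{ref_des}: {name} is {row.get(name)!r}, source says {ref[name]!r}")
        # Flag parts the source reports as out of stock.
        if "stock" in ref and int(ref["stock"]) <= 0:
            problems.append(f"{ref_des}: no stock reported for {mpn}")
    return problems


# Stand-in for a PLM or distributor query (hypothetical data).
FAKE_SOURCE = {
    "RC0402FR-0710KL": {"manufacturer": "Yageo", "rohs": "yes", "stock": "50000"},
    "OLD-PART-1": {"manufacturer": "Acme", "rohs": "no", "stock": "0"},
}
```

Passing `FAKE_SOURCE.get` as the lookup lets the check itself be tested without network access; a real integration would replace only that one argument.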

With this test in place, it’s easy to see how we’ve removed an entire category of manual checks that would otherwise be performed multiple times throughout the design process. Investing in these tests early and incrementally can profoundly improve the late stage of your project and your overall design readiness. Once you’ve implemented component tests, you can move on to even more powerful automations, like generating wire harnesses or running design rule checks (DRCs)!

Automating component validation tests is one of the many use cases of AllSpice Actions, and the first iteration has launched for early adopters. Learn more about other ways to leverage CI for hardware development here, and view our API client, py-allspice, here.