1. Discover requirements before you add form

Traditional hardware development delays collaboration until a design is “ready.” This defers the most important phase—requirement discovery—until after decisions are already cast in copper.

That’s like building a house before talking to the homeowner.

Version control flips this script. By enabling source-level sharing from day one, design conversations happen earlier. Requirements emerge before they’re accidentally locked into form. You don’t just move faster—you move smarter.

2. Big O Notation: The cost of screenshots

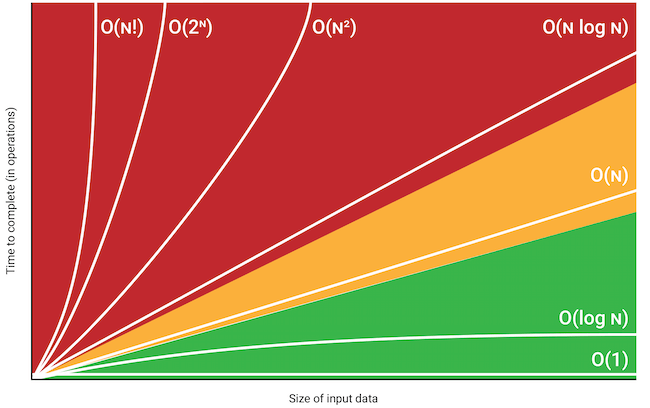

Big O Notation is a mathematical way to describe how an algorithm's time or space requirements grow as the size of the input increases. It helps us compare the scalability and efficiency of different approaches, especially in collaborative workflows where effort and coordination grow quickly with team size and project complexity.

Here are some common classes of Big O complexity:

- O(1): Constant time. The operation doesn’t change in duration regardless of input size.

- O(log n): Logarithmic time. Common in algorithms that repeatedly divide the input in half (e.g., binary search).

- O(n): Linear time. Work grows directly with input size.

- O(n log n): Linearithmic. Often seen in efficient sorting algorithms like mergesort.

- O(n²): Quadratic. Each item may require comparison with every other item—common in naive comparisons or coordination-heavy processes.

- O(2ⁿ), O(n!): Exponential or factorial. Extremely inefficient. Every added unit increases cost dramatically.

Let’s apply this to two review strategies in hardware workflows:

Option A: Engineers export and email screenshots.

Option B: Engineers push source files to a version control platform.

With screenshots, each reviewer must reconstruct context, mentally diff changes, and guess at intent. Every additional person and screenshot increases the complexity of communication superlinearly, especially when multiple reviewers provide conflicting or redundant feedback.

With version-controlled source files, changes are atomic and structured. Reviewers can comment on exact lines, compare diffs automatically, and understand the context without additional meetings. Effort grows linearly as the number of changes or reviewers increases. The system scales cleanly, with minimal coordination tax.
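To make the scaling contrast concrete, here is a minimal sketch under one simplifying assumption that is not in the original argument: with screenshots, any two participants may need to reconcile their copies directly (a pairwise model), while with a shared repository each participant only syncs with the repository. The function names are hypothetical, and real teams will fall somewhere between these two idealized curves.

```python
def screenshot_channels(people: int) -> int:
    """Pairwise reconciliation paths when every copy lives in someone's inbox.

    Assumed model: any two people may need to compare notes, giving
    n * (n - 1) / 2 channels, which grows as O(n^2).
    """
    return people * (people - 1) // 2


def shared_repo_channels(people: int) -> int:
    """One sync path per person to a single shared repository: O(n)."""
    return people


for n in (2, 5, 10, 20):
    print(f"{n:>2} people: {screenshot_channels(n):>3} pairwise channels "
          f"vs {shared_repo_channels(n):>2} repository links")
```

At ten people the pairwise model already has 45 possible channels against 10 repository links, which is the gap the paragraph above describes as a coordination tax.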

Understanding Big O helps teams quantify the hidden costs of poor collaboration patterns—and motivates the shift to more scalable, efficient design practices.

3. Entropy: Fight chaos with physics and information theory

In thermodynamics, entropy measures the number of ways a system can be arranged (its microstates). In collaboration, high entropy means more ambiguity, miscommunication, and duplicated effort.

Version control is entropy control. It constrains microstates to a shared, auditable history.

In information theory, Shannon entropy quantifies uncertainty in a message:

$$H(X) = -\sum p(x) \log_2 p(x)$$

When reviewers receive vague screenshots or partial context, the number of possible interpretations increases, raising entropy and the likelihood of misunderstanding.

Structured commits with diffs and comments reduce ambiguity and increase signal-to-noise. You get low Shannon entropy and low thermodynamic entropy: clear signals and fewer file permutations.
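As a rough illustration of the Shannon formula, the sketch below computes entropy for two made-up probability distributions over how a reviewer might interpret a change. The probabilities are assumptions chosen for illustration, not measurements: a vague screenshot is modeled as four equally likely readings, and a structured diff as one dominant reading.

```python
import math


def shannon_entropy(probabilities):
    """Return H(X) = -sum(p * log2(p)) in bits, skipping zero-probability outcomes."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Hypothetical distributions over four possible interpretations of a change.
vague_screenshot = [0.25, 0.25, 0.25, 0.25]   # every reading equally plausible
structured_diff = [0.97, 0.01, 0.01, 0.01]    # one reading clearly dominates

print(f"screenshot: {shannon_entropy(vague_screenshot):.2f} bits")
print(f"diff:       {shannon_entropy(structured_diff):.2f} bits")
```

The uniform case gives exactly 2 bits of uncertainty, while the concentrated case gives well under half a bit. The fewer plausible readings a message leaves open, the lower its entropy.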

High-entropy communication leads to costs that grow superlinearly. Low-entropy versioning keeps your team in sync with linear effort.

3a. Entropy in mechanical work and engineering systems

Entropy also plays a central role in mechanical work and energy efficiency:

- Mechanical work and thermodynamic entropy: In classical thermodynamics, entropy quantifies the portion of a system’s energy that is unavailable for doing useful work. As entropy increases in a system (e.g., through heat dissipation or friction), the amount of energy that can be converted into mechanical work decreases. This is governed by the Second Law of Thermodynamics and is foundational to engines, power generation, and heat pumps.

- Shannon entropy and control systems: In robotics, automation, and cyber-physical systems, Shannon entropy informs how much information is needed to control or stabilize a mechanical process. Lower entropy inputs lead to more predictable outcomes, which is critical for fine-tuned control loops in feedback systems.

Whether optimizing fuel combustion in a turbine or managing signal-to-noise ratio in an autonomous robot, both forms of entropy are crucial to minimizing waste and maximizing efficiency.

Numerous scientific and industrial advances have been driven by a dual understanding of thermodynamic and Shannon entropy:

- Data compression & transmission (e.g., ZIP, MP3, 5G): Shannon entropy defines the theoretical limit of lossless compression; thermodynamic entropy drives energy efficiency in data transmission.

- Cryptography: Shannon entropy ensures keys are unpredictable; thermodynamic models inform physical security like side-channel resistance.

- Machine learning: Cross-entropy loss functions (Shannon) guide learning; thermodynamics-inspired techniques like simulated annealing escape local minima.

- Video & audio codecs (e.g., H.264, AV1): Shannon entropy determines symbol encoding efficiency; hardware decoders are optimized for thermal and power constraints.

- Quantum computing: Shannon entropy quantifies information content; thermodynamic entropy governs the feasibility and energy cost of quantum operations.

- Biological systems & synthetic biology: DNA encoding and transcription follow both information-theoretic efficiency and thermodynamic viability.

- Modern CPUs & storage systems: Shannon entropy informs cache design and prediction; thermodynamic principles manage heat and energy dissipation.

These fields demonstrate that entropy isn’t just theory—it’s an engineering tool for optimizing clarity, speed, and efficiency across domains. Similar productivity gains are achievable in collaborative engineering and product development when centralized version control is used to reduce duplication, surface signal-rich changes, and manage decision-making structure over time. Just as entropy-aware strategies have revolutionized science and industry, versioning workflows can transform how hardware teams communicate, coordinate, and build.

4. Rework isn’t the enemy—misinformation is

A common objection to early sharing: “It might change.” But even if a file needs rewriting, that cost is still lower than building on wrong assumptions.

Let:

- $C_r$: Cost of rewriting a file early

- $C_w$: Cost of fixing downstream errors based on wrong assumptions

- $D$: Delay factor from revalidation, integration, and coordination

$$C_w = C_r \cdot D \quad \text{where } D \gg 1$$

Rework early costs less than course correction late. Version control doesn’t prevent rework—it makes it affordable.
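A worked example of the cost model, using hypothetical numbers: the 4-hour rewrite and the delay factor of 10 are assumptions picked only to show the arithmetic, and the function name is illustrative.

```python
def late_fix_cost(rewrite_cost_hours: float, delay_factor: float) -> float:
    """C_w = C_r * D: cost of fixing downstream errors, given the early rewrite cost."""
    return rewrite_cost_hours * delay_factor


c_r = 4    # hypothetical: hours to rewrite the file early
d = 10     # hypothetical delay factor (revalidation, integration, coordination)

c_w = late_fix_cost(c_r, d)
print(f"early rewrite: {c_r} h, late fix: {c_w} h, difference: {c_w - c_r} h")
```

Under these assumed values the late fix costs 40 hours against 4 for the early rewrite. Because the model is a simple product, the gap widens in direct proportion to the delay factor.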

5. Design is cyclical: delay doesn’t eliminate feedback

“Design isn’t a railroad track—it’s a roundabout. The faster you learn to loop gracefully, the less time you spend rebuilding from scratch.”

Why do so many projects stall when they delay collaboration? Because they try to shortcut a process that is inherently iterative.

Design isn’t a straight line—it’s a loop of exploration, feedback, and refinement. Requirements evolve as teams uncover new information. Constraints shift. Assumptions get challenged. Insight rarely arrives all at once—it builds over iterations.

Waiting to share source until things feel “final” doesn’t prevent revision—it just delays the inevitable. You’re not avoiding feedback by staying silent; you’re postponing it to a point where it becomes harder, riskier, and more expensive to act on.

The structure, clarity, and collaborative input you’re avoiding by holding off? They’re not optional. They’re coming eventually. By deferring them, you introduce latency into every future decision, and create a fragile sense of progress based on assumptions instead of alignment.

Early source sharing is an act of bravery in uncertainty. It says: “We know things will change, and we’re better off discovering that now than later.”

Version control doesn’t just make this possible—it makes it sustainable. It lets you loop through versions confidently, track changes transparently, and contextualize decision-making at every step. Instead of fearing the cycle, you use it to your advantage.

6. From abstract to actual: A datasheet diagram story

Let’s make this real. Imagine a hardware engineering team collaborating with an illustrator to create a block diagram for a product datasheet.

Scenario A: The traditional way (high entropy, high cost)

- Engineers email a screenshot.

- The designer recreates it manually.

- Changes come in via a second screenshot.

- Annotations are missed. A wrong version goes into the draft.

- Multiple revisions, emails, and delays follow.

Every step adds ambiguity. Every file copy increases entropy. Every fix increases effort non-linearly.

Scenario B: The version-control way (low entropy, linear cost)

- Engineers export source as SVG or XML and commit to Git.

- The designer builds styles on top of real structure.

- Changes are committed and diffed.

- The designer pulls and updates.

Less rework. Less guessing. One source of truth. And every change is traceable.
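To show what "committed and diffed" can look like for a text-based source format, here is a small sketch using Python's standard `difflib` module on two versions of an SVG fragment. The SVG content, the label values, and the file names are invented for illustration. In practice Git would produce an equivalent diff from the committed files.

```python
import difflib

# Hypothetical block-diagram source before and after a label change.
before = """<svg xmlns="http://www.w3.org/2000/svg">
  <rect id="mcu" x="10" y="10" width="80" height="40"/>
  <text x="20" y="35">MCU</text>
  <text x="120" y="35">3.3V LDO</text>
</svg>
"""
after = before.replace("3.3V LDO", "1.8V LDO")

# unified_diff yields header lines, context lines, and +/- change lines.
diff = list(difflib.unified_diff(
    before.splitlines(),
    after.splitlines(),
    fromfile="block_diagram.svg (old)",
    tofile="block_diagram.svg (new)",
    lineterm="",
))
print("\n".join(diff))

# Keep only the changed lines, excluding the ---/+++ file headers.
changed = [
    line for line in diff
    if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
]
```

The illustrator sees exactly one removed line and one added line, so the label change is unambiguous. With a second screenshot, the same change would have to be spotted by eye.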

This isn’t just theoretical efficiency. It’s a better daily experience for every contributor.

“We used to go through five rounds of screenshots and still end up with the wrong labels. Now, our illustrator just pulls the source and asks better questions. It cut two weeks off our datasheet process.” — Senior Electrical Engineer

7. “It’ll just create more copies”: misunderstandings about sharing source

Some teammates hesitate to share early. They worry:

“If we put this in version control now, it’ll just create more mess, not less.”

Here’s how to reframe that:

More copies happen when you don’t share source.

8. Convincing management: lead with outcomes, not equations

Executives don’t care about Big O or Shannon entropy—but they do care about:

- Shorter time to market

- Fewer bugs in production

- Streamlined audits and compliance

- More productive teams

Here’s how to connect technical practices to business outcomes:

Don’t lead with equations. Lead with:

- Timeline comparisons

- Hours saved

- Defects avoided

- Features shipped on time

Fancy math builds trust with engineers. Business outcomes build momentum with management.

9. Shift left, share source, build better

To summarize:

- Big O shows source-based workflows scale efficiently.

- Shannon entropy reveals why structured diffs improve clarity.

- Thermodynamic entropy explains how version control limits chaos.

- Cost modeling shows early rework is cheaper than late regret.

- Cyclical design proves early sharing accelerates insight.

- Human dynamics prove that source sharing builds stronger teams.

- Business outcomes win over leadership.

Shift left. Share early. Version everything. Let the math work in your favor.